Advancing Human-Machine Interface Technologies in Automotive Applications

The demands that consumers place on the automobile are ever changing. As consumers seek to integrate the vehicle further into their lives, OEMs have responded by developing systems that integrate into the vehicle and keep the driver engaged. Drivers’ needs have progressed from AM radio to 8-track tape to CD to an always-on internet connection. As the demand for integration grows, so does the need for people to interface effectively with the vehicle. At the foundation of this evolution lies the automotive Human-Machine Interface (HMI), which serves as the critical bridge that enables drivers and passengers to communicate with and control the complex systems within the vehicle, as well as connect with the outside world. This interface can encompass every vital system within the vehicle, along with those that connect to it externally.

History of HMIs

HMIs were initially very rudimentary, relying on analog controls such as knobs and buttons for basic functions like climate control, window operation, and the cassette radio. After the introduction of the iPhone in 2007, consumers began to demand highly graphical, intuitive features and greater interoperability with their devices. This shift in consumer behavior compelled car manufacturers to integrate touchscreens and more advanced digital designs, replacing physical buttons and allowing for deeper automated control. This fundamental change means that automotive brands must now compete not only on traditional engineering merits but also, increasingly, on the quality of their digital experience. As demand for more intuitive experiences grows, the user experience (UX) becomes vital to the acceptance of these new components. Making the HMI user friendly is essential to integrating it into the lives of the vehicle’s occupants. Easy-to-understand labels and a large, easy-to-navigate graphical user interface (GUI) are needed so that people of all ages and technological backgrounds can select the items they want.

Integration with the Software Defined Vehicle (SDV)

One of the major evolutions in the automotive industry is the software-defined vehicle (SDV). For the SDV to interact with the driver and the outside world at the same time, it needs an integrated experience in which the driver can complete all of their tasks through one interface. To provide this connectivity, the HMI’s processing power must keep pace with the SDV network so that the vehicle can deliver the desired outcome. As automotive Ethernet increases the speed at which data moves through the vehicle, the processing demands placed on the HMI will rise sharply (Bandur et al., 2021). From gesture control to vehicle customization, the HMI is the central unit that allows the human to interact with the machine in an understandable medium. Viewed through the lens of autonomy, the driver will rely even more on the HMI to tell the vehicle what they want it to do next. This could range from setting a destination to ordering food for delivery, all while playing your favorite movie.

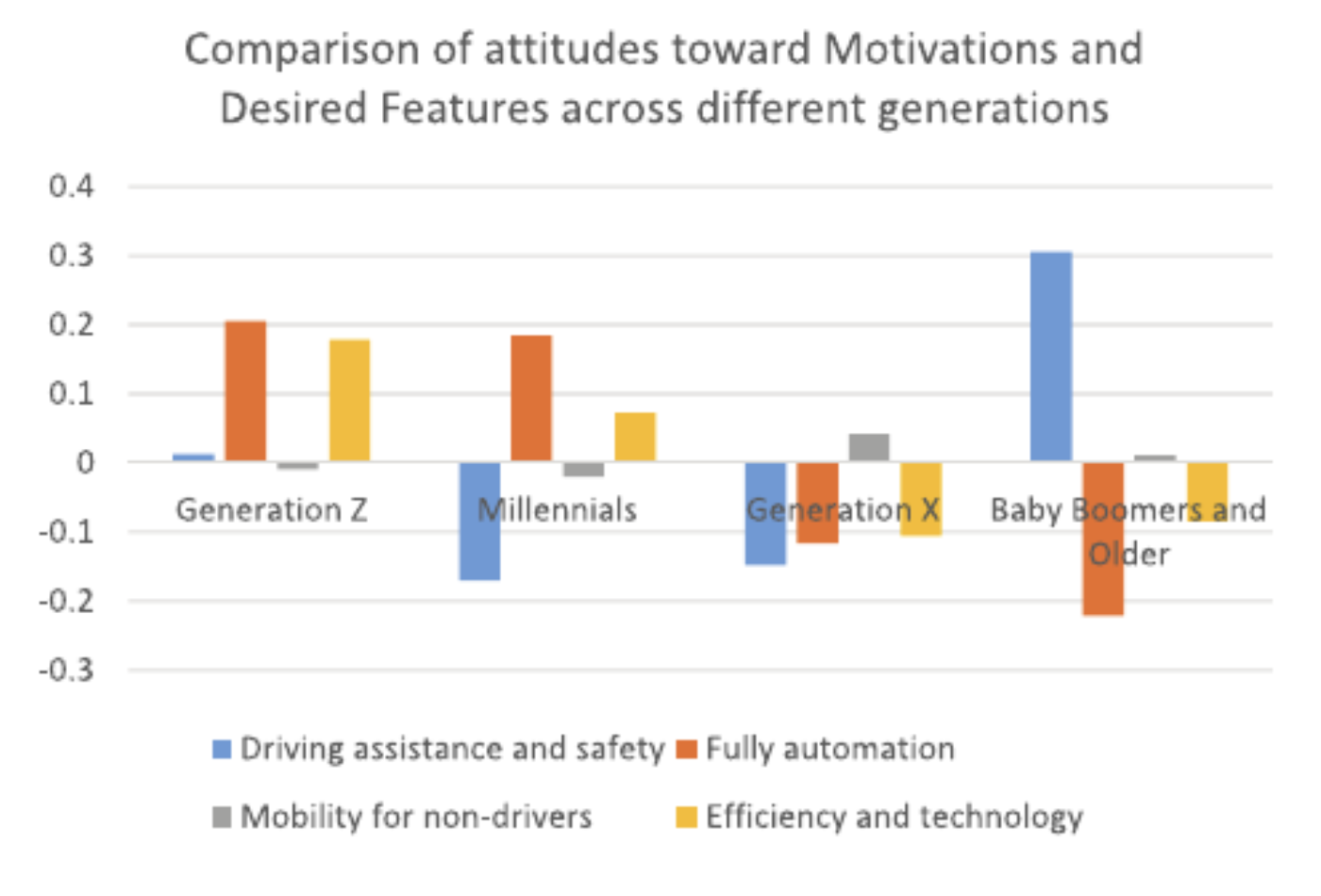

The integration of the vehicle into the daily lives of Generation Z and Millennials is changing the way the vehicle is built, equipped, and deployed. Research on attitudes toward vehicle use and desired vehicle features shows that Gen Z and Millennials demand more technology-related features (Asgari et al., 2023). As they become the two largest population cohorts, OEMs will continue to build features that appeal to them. With this increased technology and connectedness comes the question of information security.

Security

With feature-laden interfaces, secure access to content is vital if people are to adopt the technology fully without worrying that their information will be compromised. As vehicle personalization increases, features will become more immersive and better matched to the driver’s desires. HMI input devices are becoming so intuitive that researchers have demonstrated brain-wave input for controlling in-vehicle functions without any physical input (Shishavan et al., 2024). With biometric authentication and voice recognition, attempting to operate the vehicle of tomorrow without the proper biomarkers may cause it to deny you entry. The amount of personal information collected and stored on the vehicle grows with each new system adopted, and each of those systems is another potential target for exploitation. Technicians will now need to understand how these security layers affect the operational systems and whether one of them could be causing the failure they are trying to diagnose.
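To illustrate that diagnostic point, here is a minimal Python sketch of how a biometric access check might report why it denied entry. Everything in it is hypothetical: the factor names, the `FactorReading` structure, and the 0.90 match threshold are illustrative assumptions, not any OEM’s actual implementation. The idea it shows is that a faulted sensor is reported separately from a failed match, so a technician can tell a hardware problem from an unrecognized driver.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    GRANTED = "granted"
    DENIED_NO_MATCH = "denied: no biometric match"
    DENIED_SENSOR_FAULT = "denied: sensor fault"


@dataclass
class FactorReading:
    name: str           # hypothetical factor name, e.g. "face" or "voice"
    sensor_ok: bool     # result of the sensor's own self-test
    match_score: float  # 0.0-1.0 similarity to the enrolled driver profile


MATCH_THRESHOLD = 0.90  # hypothetical value; real systems tune this per sensor


def authorize(readings: list[FactorReading]) -> tuple[Decision, list[str]]:
    """Require every factor to pass; return the decision plus diagnostic notes."""
    if not readings:
        return Decision.DENIED_NO_MATCH, ["no biometric factors supplied"]

    # Check hardware first so a faulted sensor is never logged as an impostor.
    faulted = [r.name for r in readings if not r.sensor_ok]
    if faulted:
        return Decision.DENIED_SENSOR_FAULT, ["sensor fault: " + ", ".join(faulted)]

    # Every healthy factor must meet the match threshold.
    failed = [r.name for r in readings if r.match_score < MATCH_THRESHOLD]
    if failed:
        return Decision.DENIED_NO_MATCH, ["no match: " + ", ".join(failed)]

    return Decision.GRANTED, []


if __name__ == "__main__":
    decision, notes = authorize([
        FactorReading("face", True, 0.97),
        FactorReading("voice", False, 0.0),
    ])
    print(decision.name, notes)  # DENIED_SENSOR_FAULT ['sensor fault: voice']
```

Checking sensor health before comparing match scores is a deliberate design choice in this sketch: it keeps a failed microphone or camera from appearing in the fault log as an unauthorized driver.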

AI and Machine Learning

Artificial intelligence (AI) and machine learning will enable HMIs to become increasingly context-aware and predictive. Adjusting the vehicle’s behavior to the context of the situation positions the vehicle to anticipate the driver’s needs before they ask for assistance. The “smart cabin” concept describes a highly intuitive cabin that responds to the needs of its occupants. This includes features like integrated health monitoring, dynamic ambiance control (lighting, sound, temperature, scent), and adaptive seating configurations. A vehicle that cares for its occupants, rather than only protecting them in a collision, will embed itself further into the mindset of its users.

With this level of monitoring of occupant behavior inside the cabin, AI systems can use machine learning (ML) to anticipate reactions and preferences, creating an even more immersive experience. These features could also allow the vehicle to take control if the driver is experiencing a health issue, is intoxicated, or is ill. As the ability to recognize different human states improves, control of the vehicle is shifting from the person to the machine. Working in tandem with the machine, the driver must be aware of how they behave at the wheel to get the expected outcome from the driving experience. This could lower the risk of collisions caused by impaired or distracted drivers. The next logical phase of this development is connecting the person to the vehicle at a deeper level, rather than relying on the person’s physical reaction time.
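The graduated shift of control from driver to machine can be sketched as a simple policy. This is a minimal, hypothetical Python example: the inputs `eyes_off_road_s` and `impairment_score`, the four actions, and every threshold are illustrative assumptions, not values from a production driver-monitoring system or from the cited research. It shows the idea of escalating from a warning to a minimal-risk stop only as the evidence of distraction or impairment grows.

```python
from enum import IntEnum


class Action(IntEnum):
    NONE = 0
    ALERT = 1         # visual/audible warning to the driver
    ASSIST = 2        # e.g. increase following distance, pre-charge brakes
    MINIMAL_RISK = 3  # e.g. slow down and bring the vehicle to a safe stop


# Hypothetical thresholds chosen for illustration only.
GLANCE_ALERT_S = 2.0
GLANCE_ASSIST_S = 4.0
IMPAIRMENT_ASSIST = 0.5
IMPAIRMENT_TAKEOVER = 0.8


def choose_action(eyes_off_road_s: float, impairment_score: float) -> Action:
    """Map driver-monitoring signals to the strongest warranted action."""
    # Distraction: how long the driver's eyes have been off the road.
    distraction = Action.NONE
    if eyes_off_road_s >= GLANCE_ASSIST_S:
        distraction = Action.ASSIST
    elif eyes_off_road_s >= GLANCE_ALERT_S:
        distraction = Action.ALERT

    # Impairment: a 0.0-1.0 estimate from a hypothetical classifier.
    impairment = Action.NONE
    if impairment_score >= IMPAIRMENT_TAKEOVER:
        impairment = Action.MINIMAL_RISK
    elif impairment_score >= IMPAIRMENT_ASSIST:
        impairment = Action.ASSIST

    # IntEnum members are ordered, so max() picks the more cautious response.
    return max(distraction, impairment)


if __name__ == "__main__":
    print(choose_action(2.5, 0.1).name)  # ALERT
    print(choose_action(0.0, 0.9).name)  # MINIMAL_RISK
```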

Brain-Machine Interfaces

The next evolution of human-vehicle interfacing is the Brain-Machine Interface (BMI). One such BMI uses a head-up display (HUD) to present the driver with visual stimuli that they respond to by moving their eyes (Shishavan et al., 2024). Letting the driver change climate control, radio stations, map destinations, and other settings without taking their attention off the vehicle in front of them should decrease the possibility of an accident. Combined with deep learning algorithms and artificial intelligence, this interface brings the vehicle closer to working as an extension of the individual (Bellotti et al., 2019). It also bodes well for the inclusion of augmented reality and virtual reality interfaces. As research into brain signals continues, reading what a person intends directly from their electrical signals should enable increasingly faster decisions (Singh & Kumar, 2021). The lag between seeing a situation, determining the proper response, and carrying it out can be the difference between hitting a pedestrian and swerving to miss them. This approach could also cause problems for individuals with atypical brain electrical activity, which could confuse the vehicle’s calibration software. Safeguards that minimize the impact of nefarious thoughts or knee-jerk reactions to stimuli will need to be developed continually.
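A quick calculation shows why shaving time off the response matters. The distance a vehicle covers before any response begins is its speed multiplied by the reaction time. The Python sketch below uses illustrative values: 40 mph, a 1.5 s response, and a hypothetical 0.5 s improvement from a faster interface. These are assumptions chosen for the arithmetic, not figures reported in the cited studies.

```python
MPH_TO_MPS = 0.44704  # exact: 1 mile = 1609.344 m and 1 hour = 3600 s


def reaction_distance_m(speed_mph: float, reaction_time_s: float) -> float:
    """Metres travelled at constant speed before the response begins."""
    if speed_mph < 0 or reaction_time_s < 0:
        raise ValueError("speed and reaction time must be non-negative")
    return speed_mph * MPH_TO_MPS * reaction_time_s


if __name__ == "__main__":
    speed = 40.0  # mph, illustrative
    slow = reaction_distance_m(speed, 1.5)  # assumed conventional response
    fast = reaction_distance_m(speed, 1.0)  # assumed 0.5 s faster response
    print(f"1.5 s: {slow:.1f} m, 1.0 s: {fast:.1f} m, saved: {slow - fast:.1f} m")
    # 1.5 s: 26.8 m, 1.0 s: 17.9 m, saved: 8.9 m
```

Under these assumptions, a response that is 0.5 s faster stops the vehicle’s reaction-time travel about 8.9 m sooner, roughly two car lengths.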

Conclusion

The automotive technician is becoming more than a mechanical and electrical diagnostician; technicians are now venturing into the realm of biomedical human interfacing. This expanded skill set requires technicians to engage with the human-machine interface in more ways than ever before. Continual learning will become even more important as a new paradigm of understanding dawns. Integrating AI and ML into the HMI will further embed the person in the operation of the vehicle. A fully autonomous vehicle controlled by the thoughts of the person inside it is closer to reality than any sci-fi movie ever thought possible.

As with everything that moves, the technician must understand how each system operates and be able to diagnose and repair its failures. Gone are the days when a technician could diagnose a vehicle just by touching it or hearing the engine miss a beat. Technicians must now become software and electrical specialists who understand how these systems operate both independently and in dependence on one another. Often the cause is a simple electrical or software fault that is obvious to a trained eye. Understanding how each component looks and works with the others will require technicians to develop skills they never thought they would need. Breaking complex systems down into their basic structures gives the technician a quick and complete diagnostic plan for any system. Teaching and understanding technology is vital to further technician development.

The MAST series from CDX Learning Systems provides instructors with targeted material designed to exceed the requirements of any ASE training currently on the market. Because the series uses the Read-See-Do model throughout, students can choose among several learning modalities to find the one that suits them best. From simulations on cutting-edge topics to in-depth automotive technical background, CDX is committed to ensuring that instructors and students have relevant training material to hone their skill sets within the mechanical, electrical, and software-driven repair industry. CDX Learning Systems offers a growing library of automotive titles that brings highly technical content to the classroom to keep you and your students up to date on what is currently happening within the mobility industry. Check out Light Duty Hybrid and Electric Vehicles, along with our complete catalog.

About the Author

Nicholas Goodnight, PhD is an Advanced Level Certified ASE Master Automotive and Truck Technician and an Instructor at Ivy Tech Community College. With over 25 years of industry experience, he brings his passion and expertise to teaching college students the workplace skills they need on the job. For the last several years, Dr. Goodnight has taught in his local community of Fort Wayne and enjoys helping others succeed in their desire to become automotive technicians. He is also the author of many CDX Learning Systems textbooks, including Light Duty Hybrid and Electric Vehicles (2023), Automotive Engine Performance (2020), Automotive Braking Systems (2019), and Automotive Engine Repair (2018).

References

Asgari, H., Gupta, R., & Jin, X. (2023). Millennials and automated mobility: Exploring the role of generation and attitudes on AV adoption and willingness-to-pay. Transportation Letters, 15(8), 871–888. https://doi.org/10.1080/19427867.2022.2111901

Bandur, V., Selim, G., Pantelic, V., & Lawford, M. (2021). Making the case for centralized automotive E/E architectures. IEEE Transactions on Vehicular Technology, 70(2), 1230–1245. https://doi.org/10.1109/TVT.2021.3054934

Bellotti, A., Antopolskiy, S., Marchenkova, A., Colucciello, A., Avanzini, P., Vecchiato, G., Ambeck-Madsen, J., & Ascari, L. (2019). Brain-based control of car infotainment. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC) (pp. 2166–2173). https://doi.org/10.1109/SMC.2019.8914448

Shishavan, H. H., Behzadi, M. M., Lohan, D. J., Dede, E. M., & Kim, I. (2024). Closed-loop brain machine interface system for in-vehicle function controls using head-up display and deep learning algorithm. IEEE Transactions on Intelligent Transportation Systems, 25(7), 6594–6603. https://doi.org/10.1109/TITS.2023.3345855

Singh, H. P., & Kumar, P. (2021). Developments in the human machine interface technologies and their applications: A review. Journal of Medical Engineering and Technology, 45(7), 552–573. https://doi.org/10.1080/03091902.2021.1936237