Teaching ADAS: Navigating the Future of Vehicle Safety and Technology

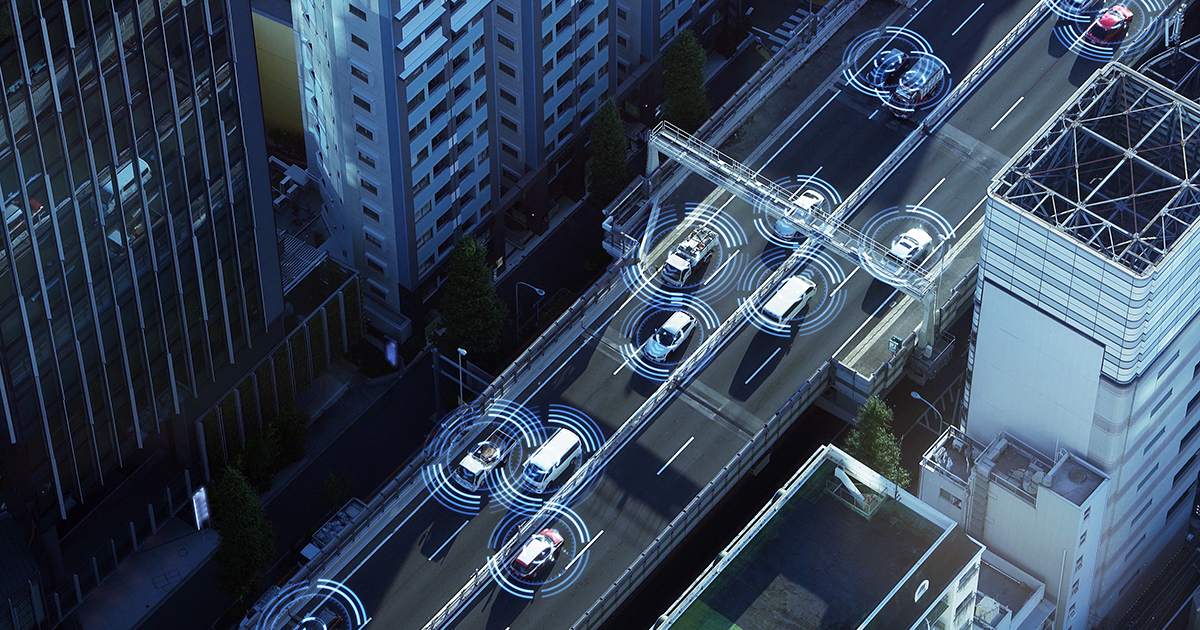

The automotive industry is experiencing a paradigm shift with the introduction of Advanced Driver Assistance Systems (ADAS). ADAS encompasses a range of electronic technologies and components designed to assist drivers in operating their vehicles safely and efficiently. This increase in complexity requires technicians to understand how these various sensors and systems interact with the vehicle as it moves down the road.

The growing use of technology in vehicle operation is helping to reduce the effects of distracted driving. Keeping the vehicle on the road and away from other vehicles as it travels saves lives and increases operational efficiency. The quest for driverless control of vehicle speed was born with the first speed control device, the “SpeedoStat,” which was patented in 1950 and used on the 1958 Chrysler Imperial (Sears, 2018).

Features that let the driver concentrate on just steering the vehicle have become common additions to vehicles throughout the 21st century. As technology has progressed, the next evolution of vehicle-assisted control is a version of autonomous drive operation. Human drivers remain a major source of crash risk, with over 5 million collisions occurring in 2020. Reducing this large number of collisions will help save lives and lower insurance costs for the average consumer. ADAS was implemented to help make roadways safer.

ADAS Components

The ability of the vehicle to control its own operation has been steadily building over the years as OEMs have provided drivers with more technology to make the driving experience more enjoyable. As this technology has been implemented, sensors and systems have been integrated more deeply into the vehicle, making them integral to its operation. One of the major issues with controlling a moving object is that the objects near it are not always stationary. The system must be able to adapt to the changing depth, speed, and size of these other objects to navigate around them. All these inputs must be correlated with each other through a concept known as sensor fusion. Sensor fusion is the process, performed within the ECM, of combining these inputs to determine the vehicle's position in relation to other vehicles and obstacles in its path. To gain awareness of its position within the roadway, the vehicle must be able to determine what is currently happening so it can adjust its path. To do this, the vehicle is equipped with several types of sensors and cameras that gather information the vehicle's ECMs can use to make adjustments. These sensors are broken down into the following types.

- Radar Sensors: These sensors use radio waves to detect objects, measure their distance, and determine their velocity. The sensor sends out high-frequency radio waves, and the reflections that return to it indicate whether there is an obstacle in the roadway. Using different operating frequencies (automotive radar commonly works in the 24 GHz and 77 GHz bands) allows the different types of radar to work in different situations. Designing these systems to meet the needs of the vehicle, and combining them with the other sensors on the vehicle, allows the ECM to see ahead of the vehicle even in the most trying situations. Picking up obstacles quickly is key to adjusting the direction of the vehicle before a collision occurs.

- Cameras: Vision-based systems analyze images and video streams to identify lane markings, traffic signs, and objects in the vehicle's path. As the camera picks up objects on the road, the vehicle's onboard computing must process the images very quickly. The camera is usually mounted high behind the windshield so it has an unobstructed view of the roadway. As it takes in images, the system distinguishes road markings from road signs, reads the signs with Optical Character Recognition (OCR), and cross-checks this with other vehicle systems to determine where the vehicle needs to be directed to minimize the possibility of running off the road or hitting an obstacle.

- LiDAR Sensors: Light Detection and Ranging (LiDAR) sensors employ laser beams to measure distances and create detailed 3D maps of the environment. The 3D map allows the ECM to determine depth and other details that the human eye would normally pick out. Combining this input with machine learning, the ECM can convert the information into real-time three-dimensional maps of the area in front of the vehicle. These sensors can be categorized into two types: electro-mechanical and solid-state LiDAR. The electro-mechanical type is a first-generation sensor with a spinning component that allows for a long-range LiDAR image. Solid-state LiDAR sensors are built on a single chip and are far more compact, which lends them to integration into automotive applications. A solid-state LiDAR sensor sends out light in a specific pattern; when the light bounces back to the sensor, it can generate an image based on what does and does not return. LiDAR is not affected by the environment as much as the cameras in the system.

- Ultrasonic Sensors: These sensors use sound waves to detect proximity and assist in parking, blind-spot monitoring, and obstacle detection. Ultrasonic sensors are easily installed in bumper covers and other low-mounted components. They draw very little power and are usually very accurate when close to obstacles. They are sometimes called proximity sensors and usually have a range of 3 to 5 feet between the sensor and the obstacle. Some OEMs no longer rely on ultrasonic sensors; Tesla, for example, uses its camera-based Tesla Vision system instead.

- Global Positioning System (GPS) / Global Navigation Satellite System (GNSS): Satellite positioning systems give the vehicle reference points it can use to determine where it is on the earth. This is the primary feature that allows for navigation, either autonomously or with the driver in control. The GPS network maintains at least 24 operational satellites around the globe, which allows a receiver to calculate its position from the signals of the satellites it can pick up (a process properly called trilateration, though often described as triangulation). GNSS is the generic term for satellite navigation systems, as there are 6 primary constellations fielded by 5 different countries and the European Union (Novatel, 2024). Correlating the vehicle's position with satellite navigation allows the vehicle to move in the direction and at the speed required for the situation.
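
For classroom use, the ranging principle shared by the radar, LiDAR, and ultrasonic sensors above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular OEM module is programmed: the function names are hypothetical, the speed of sound assumes dry air at roughly 20 °C, and the Doppler formula is the standard textbook relation for a radar that transmits and receives from the same location.

```python
# Illustrative constants; all names in this sketch are hypothetical.
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radar and LiDAR signals
SPEED_OF_SOUND_M_S = 343.0          # ultrasonic pulses (assumes air at ~20 °C)


def echo_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to an object from the round-trip time of a reflected pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so the one-way distance is half the path.
    return wave_speed_m_s * round_trip_s / 2.0


def doppler_closing_speed_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Relative speed from a radar Doppler shift: v = c * shift / (2 * carrier)."""
    if carrier_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    return SPEED_OF_LIGHT_M_S * doppler_shift_hz / (2.0 * carrier_hz)


if __name__ == "__main__":
    # Ultrasonic echo that returns after 6 milliseconds: roughly 1 m away.
    print(echo_distance_m(0.006, SPEED_OF_SOUND_M_S))
    # LiDAR return after 400 nanoseconds: roughly 60 m away.
    print(echo_distance_m(400e-9, SPEED_OF_LIGHT_M_S))
    # 77 GHz radar seeing a 5,133 Hz shift: closing at roughly 10 m/s.
    print(doppler_closing_speed_m_s(5133.0, 77e9))
```

The same `echo_distance_m` calculation serves all three sensor types; only the wave speed changes. That is one reason ultrasonic sensors suit short parking distances, while radar and LiDAR, whose signals travel at the speed of light, can cover long ranges with very short measurement times.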

ADAS and autonomous operation on a roadway require a mesh of sensors to provide the information needed for safe vehicle operation. These sensors enable the independent operation of Electronic Power Steering (EPS), Adaptive Cruise Control (ACC), Blind Spot Detection (BSD), Forward Collision Warning (FCW), Lane Keep Assist (LKA), and other vehicle systems. Understanding how each sensor provides an input, and then determining the proper output, allows the technician to diagnose these systems effectively. ASE has gone as far as to implement the L4 certification test, which focuses on understanding and diagnosing ADAS-related issues. Including this type of training within your automotive curriculum is key to keeping it relevant within the world of education. CDX Learning Systems is constantly developing new content to adjust to the new normal within the automotive repair field. ADAS and autonomous technologies are the next evolution within the automotive realm.
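
To help students connect sensor inputs to a system output, the sensor fusion idea described under ADAS Components can be paired with a simplified Forward Collision Warning decision. The sketch below is a teaching aid under loud assumptions: it fuses distance estimates with an inverse-variance weighted average (one simple fusion technique, not necessarily what any production ECM uses), and every name, variance value, and the 2.5-second warning threshold is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str         # label such as "radar" or "camera" (illustrative only)
    distance_m: float   # this sensor's estimate of the gap to the object ahead
    variance_m2: float  # assumed measurement variance; smaller means more trusted


def fuse_ranges(readings: list[RangeReading]) -> float:
    """Combine distance estimates with an inverse-variance weighted average."""
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance_m2 for r in readings]
    weighted_sum = sum(w * r.distance_m for w, r in zip(weights, readings))
    return weighted_sum / sum(weights)


def time_to_collision_s(distance_m: float, closing_speed_m_s: float) -> float:
    """Seconds until contact if nothing changes; infinite if the gap is not closing."""
    if closing_speed_m_s <= 0:
        return float("inf")
    return distance_m / closing_speed_m_s


def forward_collision_warning(
    readings: list[RangeReading],
    closing_speed_m_s: float,
    threshold_s: float = 2.5,  # assumed threshold, chosen only for illustration
) -> bool:
    """Return True when the fused time to collision falls below the threshold."""
    fused_distance = fuse_ranges(readings)
    return time_to_collision_s(fused_distance, closing_speed_m_s) < threshold_s


if __name__ == "__main__":
    readings = [
        RangeReading("radar", 40.0, 0.25),  # radar trusted more for range
        RangeReading("camera", 42.0, 1.0),
    ]
    print(fuse_ranges(readings))                      # fused gap, about 40.4 m
    print(forward_collision_warning(readings, 20.0))  # closing fast: warn
    print(forward_collision_warning(readings, 10.0))  # closing slowly: no warning
```

A diagnostic takeaway for students is that the output depends on every input: if one sensor reports a bad distance or is misaligned after a repair, the fused value shifts and the warning may trigger too early or too late.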

The MAST Series from CDX provides the instructor with targeted material to exceed the requirements of any ASE training currently on the market. Because the series uses the Read-See-Do model throughout, students encounter various learning modalities across the products, which allows them to pick the way they learn best.

About the Author:

Nicholas Goodnight, PhD is an ASE Master Certified Automotive and Truck Technician and an Instructor at Ivy Tech Community College. With nearly 20 years of industry experience, he brings his passion and expertise to teaching college students the workplace skills they need on the job. For the last several years, Dr. Goodnight has taught in his local community of Fort Wayne and enjoys helping others succeed in their desire to become automotive technicians.